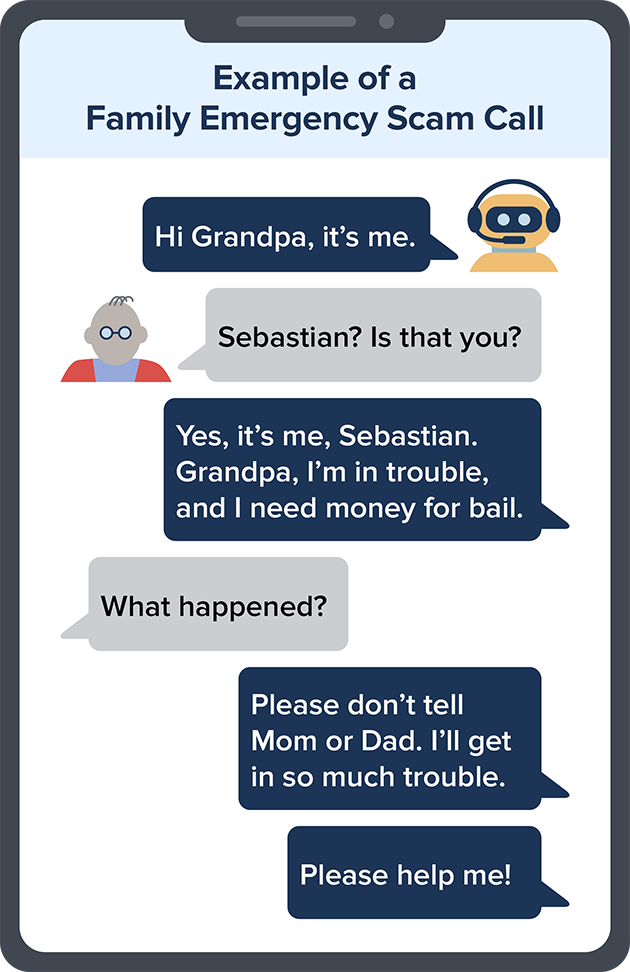

You get a call. There's a panicked voice on the line. It's your grandson. He says he's in deep trouble — he wrecked the car and landed in jail. But you can help by sending money. You take a deep breath and think. You've heard about grandparent scams. But darn, it sounds just like him. How could it be a scam? Voice cloning, that's how.

Artificial intelligence is no longer a far-fetched idea out of a sci-fi movie. We're living with it, here and now. A scammer could use AI to clone the voice of your loved one. All he needs is a short audio clip of your family member's voice — which he could get from content posted online — and a voice-cloning program. When the scammer calls you, he’ll sound just like your loved one.

So how can you tell if a family member is in trouble or if it’s a scammer using a cloned voice?

One clue is how the caller wants you to pay. Scammers ask you to pay or send money in ways that make it hard to get your money back. If the caller says to wire money, send cryptocurrency, or buy gift cards and give them the card numbers and PINs, those could be signs of a scam.

If you spot a scam, report it to the FTC at ReportFraud.ftc.gov.